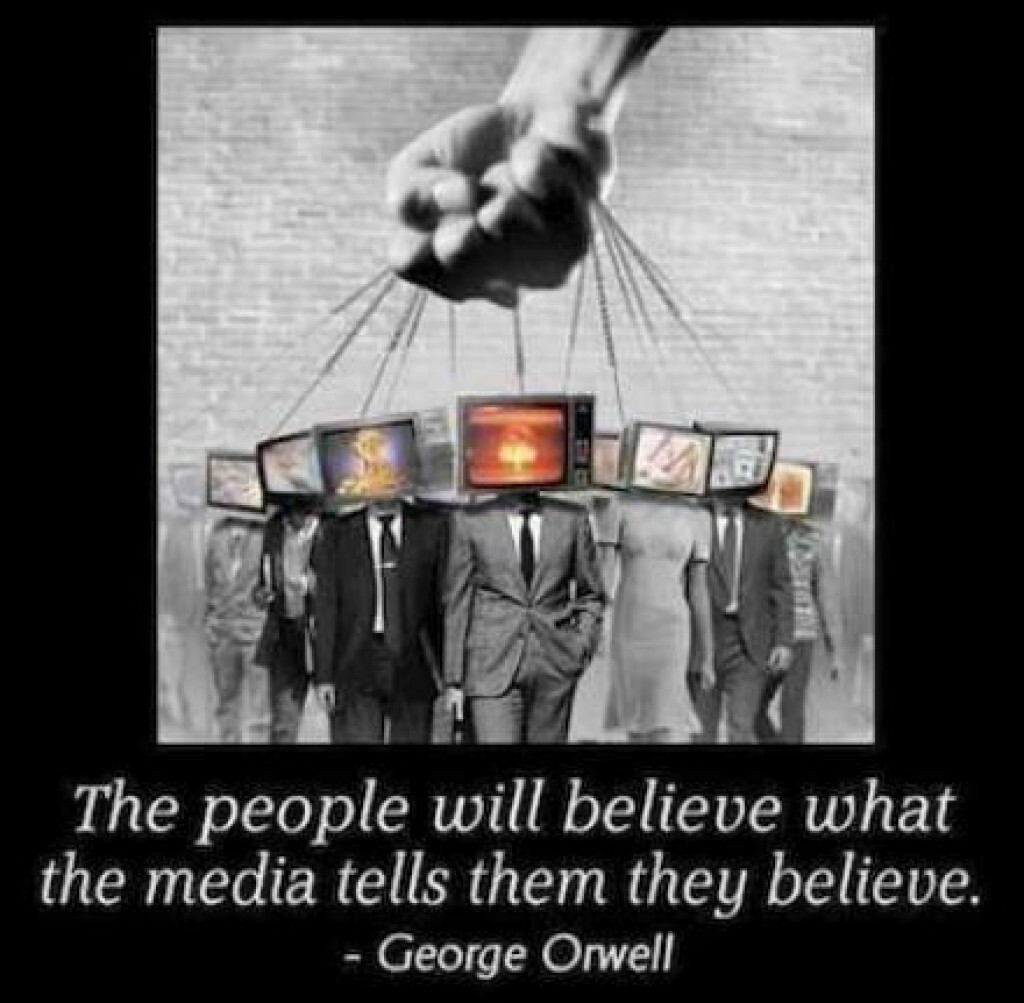

Does this not describe exactly what has been happening in the US lately? Rather than figuring things out for themselves or doing their own research, people just blindly believe that whatever CNN or MSNBC or <insert any other here> tells them must be the truth. When did critical thinking stop being taught to children? Schools clearly don't teach it, but why don't parents? In the space of 30 years it feels like we've seen the creation of an ideological culture that cannot think for itself and relies entirely on the media for its truth.